Shelter Scotland was commissioned by the Scottish Government at the end of 2017 to raise awareness of Scotland’s new private residential tenancy among landlords and tenants. The private residential tenancy gives tenants and landlords more rights and responsibilities, and gives tenants more security in their own home.

We knew that our awareness-raising activity could prompt a lot of people to ask questions about the new tenancy.

Our free national helpline is available 9am to 5pm, Monday to Friday, and advice seekers also have the option to speak to someone using live chat, but both of these channels are already under pressure and we receive more calls than we can answer.

It got us thinking: could a chatbot help us alleviate the pressure on our helpline during this activity and in the future?

What exactly is a chatbot?

Say hello to Ailsa, our new chatbot!

Ailsa started her life as what’s commonly known in the industry as a frequently asked questions (FAQ) chatbot. You map out all the questions that you think your users will ask, write responses and then organise those responses like a flow chart. You can use “off the shelf” products like ChatFuel to power the questions and answers, or you can use Microsoft’s bot framework to build a bot that integrates with your website or with Facebook Messenger.

We programmed Ailsa to answer the simple questions about the new tenancy, things like:

- What are the changes that are being introduced?

- What happens to existing tenancies?

- How do you bring a new tenancy to an end?
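The flow-chart structure of an FAQ bot can be sketched in a few lines. This is a minimal illustration, not Ailsa’s real script: the node ids, button labels and placeholder answers are all made up, and `nextNode` is a hypothetical helper rather than part of ChatFuel or any bot framework.

```typescript
// A node in the FAQ flow chart: the bot's message plus the buttons shown under it.
interface FaqNode {
  message: string;
  // Button label -> id of the node to show next.
  options: Record<string, string>;
}

// Hypothetical content: ids and wording are placeholders, not Ailsa's real script.
const faqFlow: Record<string, FaqNode> = {
  start: {
    message: "Hi, what would you like to know about the new tenancy?",
    options: {
      "What changes are being introduced?": "changes",
      "What happens to existing tenancies?": "existing",
      "How do I end a tenancy?": "ending",
    },
  },
  changes: { message: "(answer about the changes)", options: { "Back to start": "start" } },
  existing: { message: "(answer about existing tenancies)", options: { "Back to start": "start" } },
  ending: { message: "(answer about ending a tenancy)", options: { "Back to start": "start" } },
};

// Move from the current node to the next one. Anything that is not one of the
// offered buttons leaves the user where they are.
function nextNode(currentId: string, choice: string): string {
  const next = faqFlow[currentId]?.options[choice];
  return next ?? currentId;
}
```

Because every step is a lookup on a fixed button label, a typed question such as "my landlord wants me out" matches nothing and the conversation simply stays put.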

The response was pleasantly surprising.

In the 3 months that we ran the campaign, people had over 6,500 interactions with Ailsa to find answers.

What was striking for me was that many people are confident using a bot. By comparison, around 4,000 people used our live chat service over the last year. Granted, these were more in-depth queries, but still, the bot did a pretty good job of keeping our lines free for more in-depth and emergency queries.

All things considered, an FAQ bot is pretty limited in what it can answer. It doesn’t allow the user to veer off script, and it also doesn’t take any free text questions.

Ailsa version 2.0

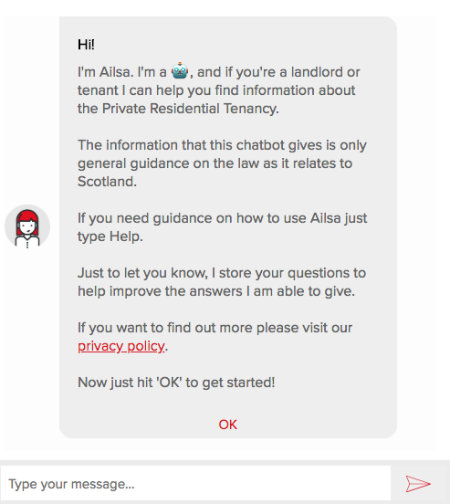

People found the chatbot useful, but we wanted to see if people would respond better to a bot that processed natural language. Users wanted to ask their own questions in their own way, which posed its own set of potential pitfalls.

Does someone in Glasgow ask a question the same way as someone in Aberdeen? What about local dialects?

We developed the app with a Node.js back end that plugs into Microsoft’s Azure Bot Framework, with LUIS as the natural language processing model. There are other models out there, such as IBM Watson, which is available for use, and, closer to home, Siri and Alexa, which aren’t open for public use.

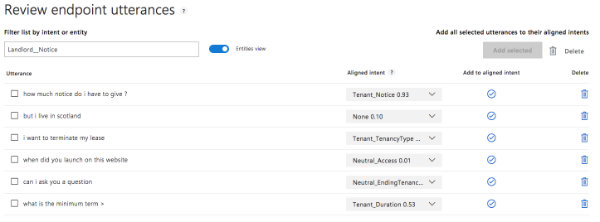

Each question typed by a user gets mapped to an answer by LUIS. The model does its magic and maps what it thinks the user’s intent was to an entity in your model. In our case, an entity could be a question related to notice periods or deposits.

The language model does a pretty good job of routing questions to answers and it deals well with misspellings and truncated questions.
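To make the routing step concrete, here is a rough sketch of how a model’s best guess might be turned into an answer. Everything in it is an assumption for illustration: the `Prediction` shape is hypothetical and is not the real LUIS response format, the topic names and answers are placeholders, and the `MIN_SCORE` cut-off is an invented value.

```typescript
// Hypothetical shape for a language-model result; not the real LUIS response format.
interface Prediction {
  topic: string; // the model's best guess at what the question is about
  score: number; // confidence between 0 and 1
}

// Placeholder answers keyed by topic; names and text are illustrative only.
const answers: Record<string, string> = {
  NoticePeriods: "(answer about notice periods)",
  Deposits: "(answer about deposits)",
};

// Assumed cut-off; a real value would be tuned against chat transcripts.
const MIN_SCORE = 0.6;

// Return the mapped answer, or null when the model is unsure or the topic is unknown.
function routeToAnswer(prediction: Prediction): string | null {
  if (prediction.score < MIN_SCORE) return null;
  return answers[prediction.topic] ?? null;
}
```

Returning `null` rather than a low-confidence answer gives the bot a clear signal that it should hand the user on to other help instead of guessing.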

The Azure platform also has a nice interface where you can assign questions that the model couldn’t quite figure out, in effect training the model to improve over time.

I don’t think that a chatbot can replace a real person, especially when providing essential advice, but it can certainly help answer some of the basic questions your users might have, freeing up time for your advisers to answer more urgent queries.

With this in mind, Ailsa tries to answer the question but if she doesn’t know the answer she does one of three things:

- If a live chat operator is available, she provides the link to chat

- If a live chat operator isn’t available, she checks whether our helpline is open and provides the number if it is

- If no live help is available, she directs users to our Advice pages.
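The fallback order above can be sketched as a single function. This is an illustration under stated assumptions rather than Ailsa’s actual code: the URLs and phone number are placeholders, `liveChatAvailable` stands in for whatever check the live chat service really offers, and the opening hours are the 9am to 5pm, Monday to Friday helpline hours mentioned earlier, evaluated in the server’s local time.

```typescript
type Fallback =
  | { kind: "liveChat"; url: string }
  | { kind: "helpline"; phone: string }
  | { kind: "advicePages"; url: string };

// Placeholder contact details, not Shelter Scotland's real ones.
const LIVE_CHAT_URL = "https://example.org/live-chat";
const HELPLINE_PHONE = "0000 000 0000";
const ADVICE_URL = "https://example.org/advice";

// Helpline hours from the article: 9am to 5pm, Monday to Friday.
// Uses the local time of the machine running the code; no holiday handling.
function helplineIsOpen(now: Date): boolean {
  const day = now.getDay(); // 0 = Sunday, 6 = Saturday
  const hour = now.getHours();
  return day >= 1 && day <= 5 && hour >= 9 && hour < 17;
}

// Try live chat first, then the helpline, then the advice pages.
function chooseFallback(now: Date, liveChatAvailable: boolean): Fallback {
  if (liveChatAvailable) return { kind: "liveChat", url: LIVE_CHAT_URL };
  if (helplineIsOpen(now)) return { kind: "helpline", phone: HELPLINE_PHONE };
  return { kind: "advicePages", url: ADVICE_URL };
}
```

Keeping the advice pages as the final branch means the user always gets somewhere to go, even in the middle of the night.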

The importance of usability testing

It’s easy to squirrel yourself away building your bot and then showcase your creation to the world in a “ta-da moment”, but from experience that hardly ever works out as planned. You will no doubt have overlooked some obvious mistakes in how your creation performs in the hands of real users.

During our own testing, we squashed some obvious bugs to do with the flow of questions and the placement of buttons, but one thing that surprised me was that some users just wouldn’t engage with a bot.

It’s important to consider your audience before you start planning your bot project.

One user disliked the rise of robots in our world. Another preferred long-form content that covers a topic in its entirety. While they weren’t against using a chatbot, they couldn’t help but think that important information was being left out.

They’re right, of course. A bot can only provide bite-sized content, so link out to deeper information if you have it.

The results so far

We launched version 2 in mid-June, so it’s early days, but so far we’ve had a mix of conversations, some successful and others too complex for Ailsa to deal with. Users whose conversations weren’t successful were redirected to contact us for help via live chat, the phone or our website.

The transcripts also show us when there is demand for the service. So far, we’ve had a few chats outside of our opening hours, which shows that a bot, if done right, can provide an essential lifeline to people when there isn’t a real person available.

So what do you think?

Do you think a chatbot can help your organisation deliver a better service? It’s worth considering, but do think about your audience and the supporting content you can refer users to, and provide a way for them to reach a real person should they want to.